More-than-human Design

NOW

Special issue in HCI Journal “Posthumanist HCI and the non-human-turn in design”

Special Issue Editors: Elisa Giaccardi, Delft University of Technology, The Netherlands; Johan Redström, Umeå Institute of Design, Sweden; Iohanna Nicenboim, Delft University of Technology, The Netherlands

Topics of Interest

• Artificial agency and co-performativity

• The interface as a site of reconfiguration

• Decentering design methodologies

• Entanglement and design experiments

• Ethics and aesthetics of posthumanist participation

• Pedagogies of nonrepresentational design

• Socio-economic and political redirections

Timing

Proposals due: 15th September 2022

Full papers due: 15th January 2023

Final papers due: 1st September 2023

https://t.co/xNh01D6ZSm

-----

May 2nd 21:15 CEST - CHI

Panel “More-than-human Concepts, Methodologies, and Practices in HCI”

Hosted by: Aykut Coskun, Nazli Cila, Iohanna Nicenboim

Panelists: Elisa Giaccardi, Laura Forlano, Christopher Frauenberger, Marc Hassenzahl, Clara Mancini, and Ron Wakkary

The last decade has witnessed the expansion of the design space to include the epistemologies and methodologies of more-than-human design (MTHD). Design researchers and practitioners have been increasingly studying, designing for, and designing with nonhumans. This panel will bring together HCI experts who work on MTHD with different nonhumans as their subjects. Panelists will engage the audience through discussion of their shared and diverging visions, perspectives, and experiences, and through suggestions for opportunities and challenges for the future of MTHD. The panel will provoke the audience into reflecting on how the emergence of MTHD signals a paradigm shift in HCI and human-centered design, what benefits this shift might bring, whether MTHD should become the mainstream approach, and how to involve nonhumans in design and research.

Panel video: https://drive.google.com/file/d/1xAHlncFRI7sX_AMUNJWXPbrbMywpF2wN/view

-----

Subscribe to the newsletter to receive updates on publications and workshops from the MTHD network (only twice a year):

https://mailchi.mp/003e43e766fd/more-than-human

To share opportunities with the MTHD network, please send me an email at i.nicenboim@tudelft.nl

More-than-human Design and AI:

In Conversation with Agents

Organised by Iohanna Nicenboim, Elisa Giaccardi, Marie Louise Juul Søndergaard, Anuradha Venugopal Reddy, Yolande Strengers, James Pierce, and Johan Redström

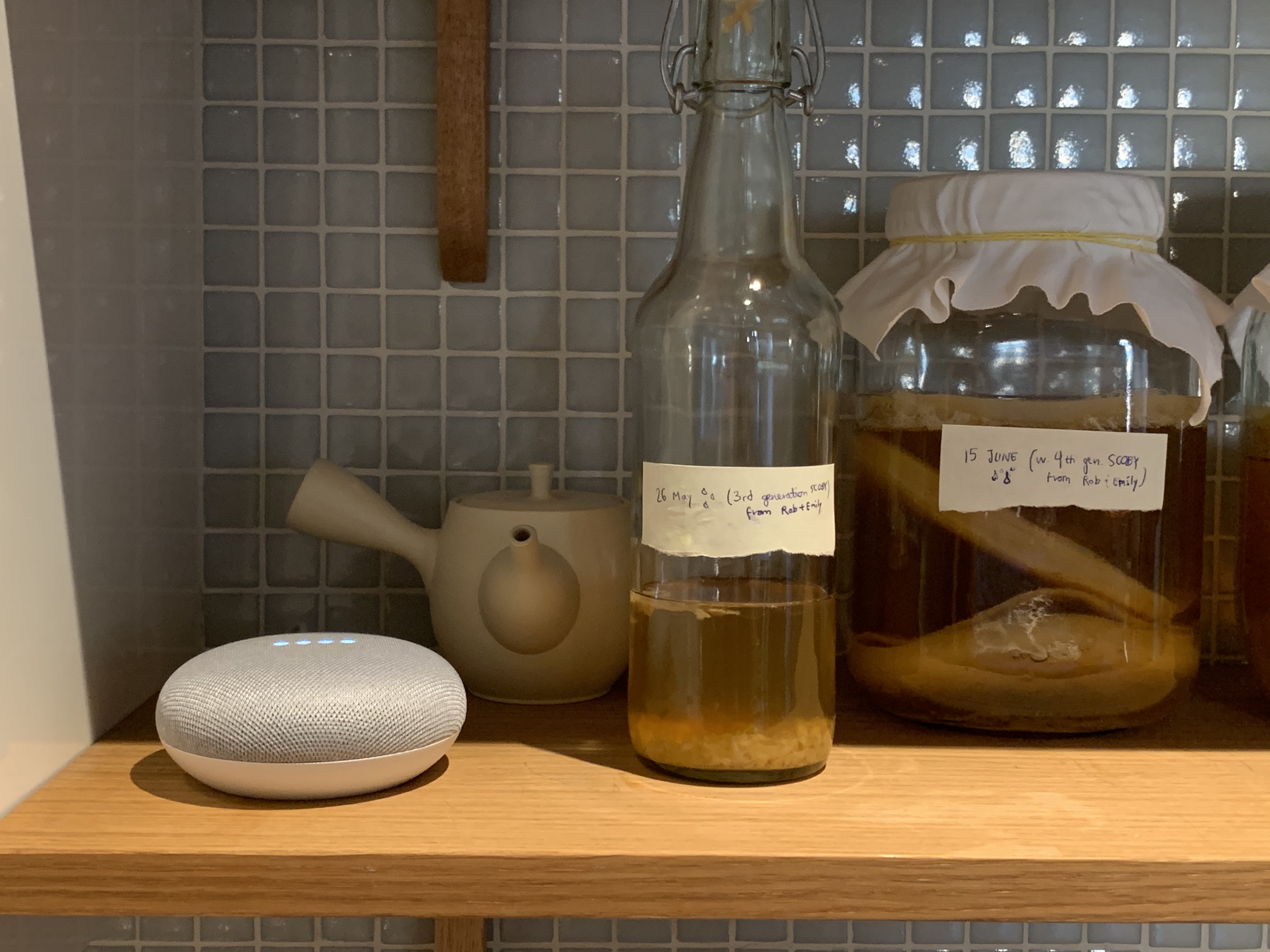

The workshop brought together HCI researchers, designers, and practitioners to explore how to study and design (with) AI agents from a more-than-human design perspective. Through design activities, we experimented with Thing Ethnography (Giaccardi et al. 2016; Giaccardi 2020) and Material Speculations (Wakkary et al. 2015) as a starting point to map and possibly integrate emergent methodologies for more-than-human design, and to align them with third-wave HCI. The workshop was conducted in four sessions across different regions of the world and had about 35 participants. We explored AI agents from a more-than-human design perspective along three themes.

The workshop was organised as part of DIS (ACM Designing Interactive Systems Conference). You can read the workshop publication at the ACM digital library.

Iohanna Nicenboim, Elisa Giaccardi, Marie Louise Juul Søndergaard, Anuradha Venugopal Reddy, Yolande Strengers, James Pierce, and Johan Redström. 2020. More-Than-Human Design and AI: In Conversation with Agents. In Companion Publication of the 2020 ACM Designing Interactive Systems Conference (DIS '20 Companion). Association for Computing Machinery, New York, NY, USA, 397–400. DOI: https://doi.org/10.1145/3393914.3395912

Documentation

One of the workshop’s outcomes was a questionnaire for conversational agents, designed to probe them on issues of gender, identity, ownership, and more. Try asking your conversational agent (such as Alexa, Siri, or Google Home) some of these questions!